The Scale Problem

A cautionary note on generative AI and where it takes us

As technology scales, it's easy to forget that humans don't. This essay explores "The Scale Problem" and how it relates to the rise of generative AI. Viewed through the lens of social media's ascent, The Scale Problem can help us see through the hype and benefit from what's in store.

I can't shake a strong feeling of déjà vu, seeing the parallels between ChatGPT hype and the early days of the Internet. While we're only getting started with ChatGPT, its trajectory and influence will change society. Social computing facilitates mass connection and collaboration between people. ChatGPT, one example of generative AI, will do the same between people and machines.

Human evolution is no match for generative AI’s rate of improvement. The gap requires thoughtful consideration of its effects on us.

It's hard to fathom that just a couple of decades ago, the Internet was still an exploratory medium. "Content was king." In 1997, "viral marketing" became a practice, but email, as opposed to video, carried the messages. AOL merged with Time Warner to create the world's largest digital newsstand. Netflix was founded to mail DVDs to people's homes.

These forays preceded a bright, albeit different, future. Wired magazine projected "The Long Boom," an era of prosperity built on a social computing paradigm. This required a radically different perspective. The web represented more than replicating analog content in digital form. The new value came from connecting people to one another and to the world's information.

Writing in "Fragmented Future" (1999), Darcy DiNucci, a writer and user experience designer, coined the phrase "Web 2.0." After the dot-com collapse, the term was popularized to distance the movement from the bust. Venture-backed entrepreneurs built out new technology infrastructure. Wikis, blogs, podcasts, and social networking sites revolutionized communication.

Web 2.0 proponents suggested that as networked technology scaled, our lives would improve.

THE SCALE PROBLEM

By the mid-2000s, the "attention economy" took over. Our minds and time became the competitive ground for “monetization,” not to mention influence and power. Whether public attention was farmed for connection, media consumption, or social commerce didn't matter. Attention became a commodity, like oil or corn, and a highly valued resource. An information-age gold rush was born.

A power shift followed. Attention, and with it influence, became an aggregation game. FAANG stocks (Facebook, Apple, Amazon, Netflix, and Google) generated trillions of dollars in market cap. Outlets like Huffington Post, Gawker, Buzzfeed, and Breitbart changed media dynamics. A new class of influencers built fandom factories by aggregating views and likes.

For the first time, anyone could "go public" with their ideas, paid in a new form of compensation: social capital. In the process, confidence plunged in those in positions of authority, like the press, clergy, politicians, and even doctors.

This power shift had a primary driver: Scale.

"To scale" is to increase or expand in size, amount, or intensity. Companies that scale fastest often reap the greatest spoils. Venkatesh Rao, editor of Ribbonfarm, captured the ethos of digital world builders: "We tend to lionize 'inventors,' but the real heroes are probably the 'scalers.'"

Methodologies for doing so were codified. Sean Ellis coined the term "growth hacking" in 2010 to describe a new approach to building companies. Growth hacking meant rapidly iterating on and testing ideas to find the most effective and efficient ways to increase user growth. Startups including Slack, Zoom, Twitch, Reddit, Tinder, and Dropbox popularized the practice.

By 2015, extreme scaling became the goal. Reid Hoffman, a partner at VC firm Greylock and co-founder of LinkedIn, popularized "blitzscaling," a set of practices for igniting and managing dizzying growth.

Hoffman argued that the secret to startup success wasn't a genius entrepreneur or visionary venture capitalist. Instead, winners develop the capacity to grow extremely fast. He also noted that software is a natural fit for blitzscaling: the more integral software became to every industry, the faster things moved. The idea was endorsed by Bill Gates (Microsoft), Sheryl Sandberg (Facebook), and Eric Schmidt (Google), among others. Business returns overwhelmed the human element.

“Blitzscaling” suggested that as technology scaled, society would see benefits more quickly.

Here's the problem: Humans don't scale.

Our bodies, senses, and mental capacities can't adjust to the speed of technological improvement. The same applies to human-based institutions. Humans have a scale problem keeping up with a high rate of change.

I wonder if scale at all costs fuels adverse effects: a different type of "boom" marked by increased polarization, isolation, and failing mental health.

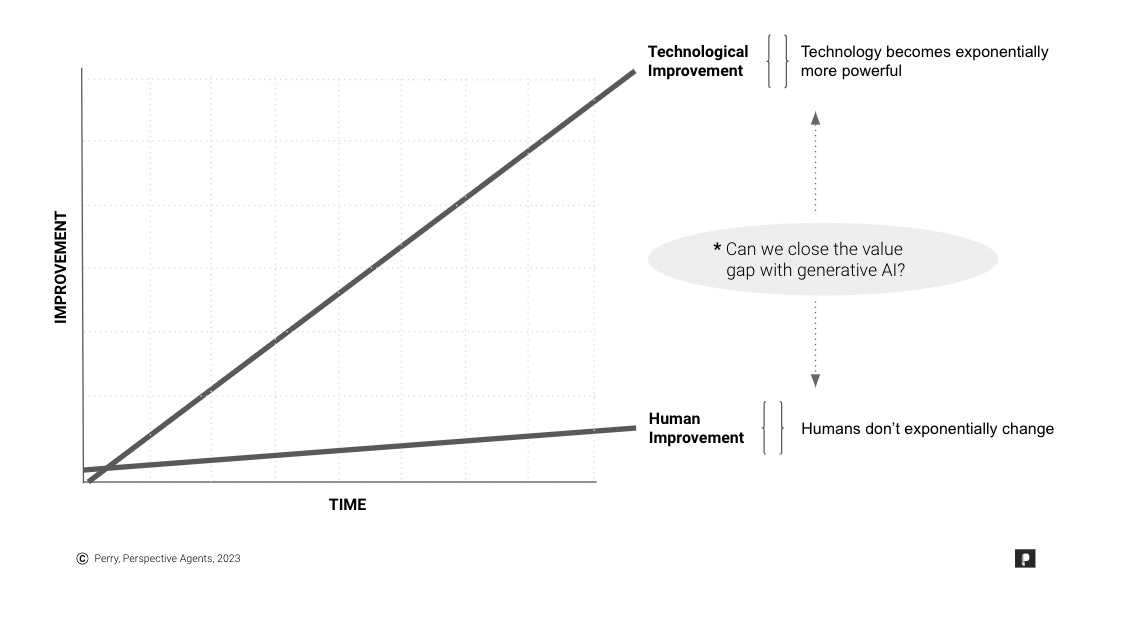

The simple visual above illustrates conflicts of scale between technologies and the people using them. Beyond the scale and adoption of digital technologies, how do we improve the resulting value for human beings? Is it possible to raise human potential more quickly over time?

In an environment of fast-moving innovation, the relative "value" that tech platforms, users, and human organizations each derive is at odds. In fact, the value gap is enormous.

SCALE AND GENERATIVE AI

As we leap into the next great tech and social experiment, it's hard not to have flashbacks to the hype and oversights of the past two decades. Many in the industry think generative AI is the most important innovation of our time, potentially as profound as the PC and the Internet.

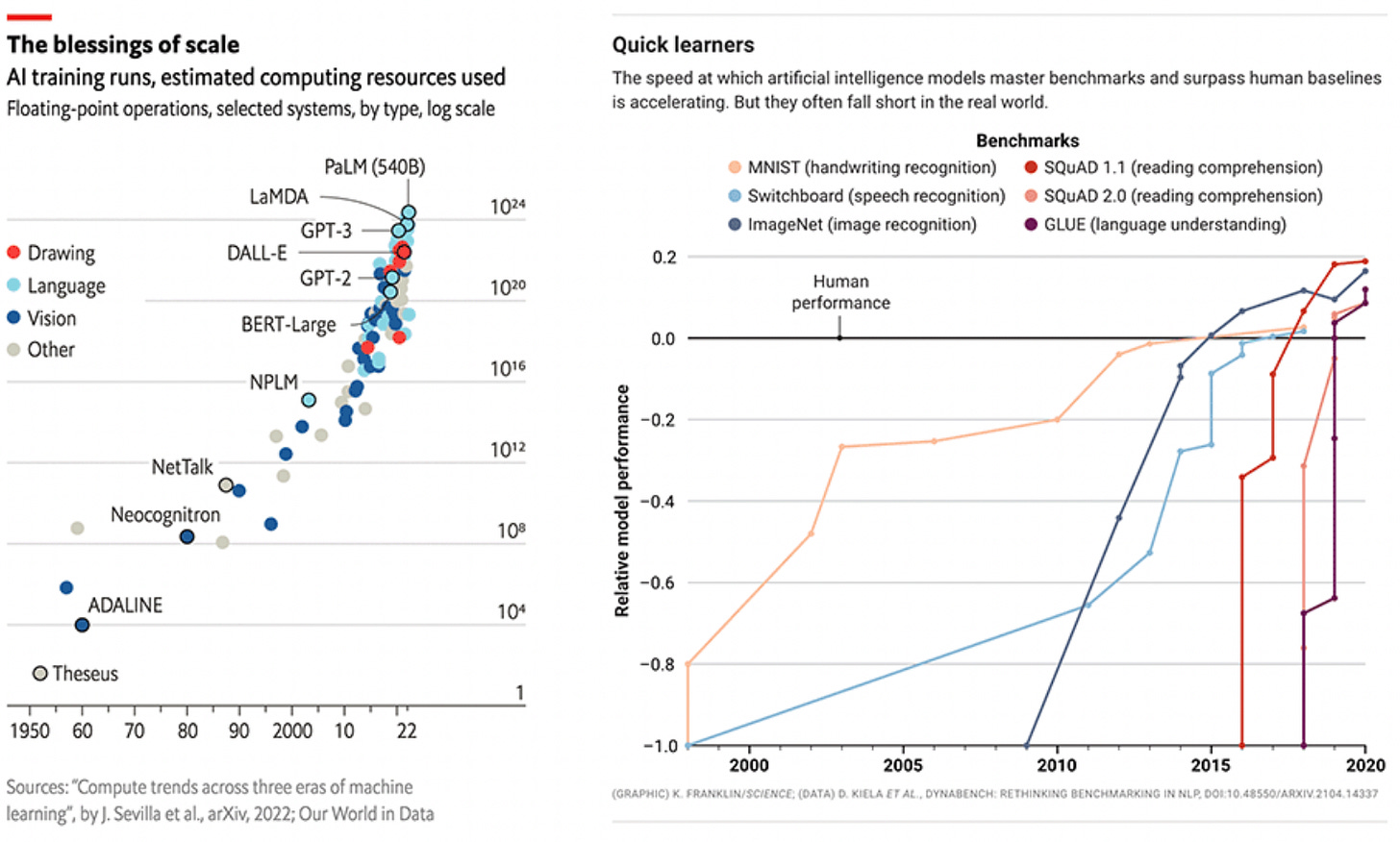

So the next tech gold rush is here. According to PitchBook, VC investment in generative AI has increased by 425% since 2020, to $2.1bn. The capital is chasing a rocket ship in flight. According to Sequoia, between 2015 and 2020, the computation used to train generative AI models increased by six orders of magnitude. These models now surpass human performance benchmarks in handwriting, speech and image recognition, reading comprehension, and language understanding.

As millions of users interact with ChatGPT and their feedback helps refine its models, its capabilities will continue to grow at a breathtaking rate. Whereas Moore's Law led us to expect computing power to double roughly every two years, research teams see the compute used to train AI models doubling every six to 10 months. The current performance of generative AI is nothing compared to what it will soon be capable of. Moreover, it's impossible to foresee where it will take us over a longer horizon.

The question of prosperity must go beyond technical, financial, or productivity gains. Can more technology alone make life better? Can it make us more human? Attempts to answer human questions, exemplified by a tweet from the CEO of OpenAI, will be distorted by scale.

HUMAN EFFECTS OF SCALE

This rate of technological progress and adoption is unprecedented. The same may be said of the psychological and economic dilemmas arising in response to it. Writing for The Atlantic, Charlie Warzel raised important social questions, warning of a coming psychological meltdown he calls "AI vertigo." Warzel says:

It’s hard not to get a sense that we are just at the beginning of an exciting and incredibly fast-moving technological era. So fast-moving, in fact, that parsing what we should be delighted about, and what we should find absolutely terrifying, feels hopeless. AI has always been a mix of both, but the recent developments have been so dizzying that we are in a whole new era of AI vertigo.

How do we emotionally, culturally, or politically prepare for what's in store?

To try to answer this question, I recently joined a think tank session to outline generative AI guidance and potential guardrails. The group, composed of technologists, VCs, media scholars, and lawyers, was unanimous: if we are to learn from social media's ascent, we must consider unintended effects through a humanist lens.

A “people agenda” must take precedence over the shock and awe of new apps, constant upgrades, or big tech battles.

Humans can't improve at the rate of our technological innovations. Moore's Law allowed us to keep upgrading computing and digital connection, and as a result, we've gotten hooked on new digital gateways: a new device, network, or platform. As AI is integrated into every aspect of our lives, it will evolve from something we hold or wear into something eventually embedded inside us.

What remains unclear is how we will cope with it all and, more opportunistically, what value AI offers us.

A more digitally dependent lifestyle won't necessarily improve how we build and sustain relationships, find happiness, or improve satisfaction in our work.

There's still much to uncover in our understanding of ourselves and others and how we use these new powers. If recent history is any guide, we certainly can't count on our cultural evolution advancing at the same rate as technological progress.

So far, I’ve found ChatGPT to be an exciting tool for experimentation. It paints a picture of future computing. But practically speaking, it’s nothing but surface: it has done little to make me more informed or better at what I do. General-purpose search gives the illusion of thinking. It's just that, an illusion, and it's dangerous right now to think that AI alone is the answer.

While pundits debate whether generative AI is a prelude to AGI, there's immediate use in weak (narrow) AI. The benefits come from matching the best perspectives with the best agents to inform thinking and output. Combined, they make scaling knowledge and learning a real possibility, provided the effort starts with a focus.

To illustrate the point, over the past two years I've used various AI-assisted tools to create a knowledge and thinking platform. My current range of AI-supported apps spans:

Kindle — to read and annotate books and research papers.

Reader — a new aggregator incorporating RSS, PDF editing, video and audio transcription, ChatGPT-powered summarization, and article queries.

Readwise — a sister app of Reader that houses all my highlights, along with a feed that surfaces essential passages in a daily email and newsfeed.

Roam — an aggregation and work platform for thinking and revisiting ideas, research, and task lists.

This "stack" helps me automate and organize input and ideas. I use it to inform and streamline the research and writing of my book, Perspective Agents. Combining these "tools for thought" has been remarkable for my productivity and self-improvement. My progress wouldn't be possible without them.

I hope that more of the discourse around generative AI will focus on human needs and value-driving experiments rather than on new, exponentially more powerful technologies in search of a problem (and the riches that come with mass adoption).

A human-centric focus, rather than "blitzscaling" AI, can help bridge the value gap. In the process, it may help dial down the hype, distraction, and delusions of the AI vertigo looming on the horizon.
