Knowledge Keepers

How to build a search engine from "expert agents"

“Minds are made from many little parts, each mindless by itself.”

— Marvin Minsky, artificial intelligence pioneer

Skepticism of intellectual authority runs deep in most of us. Distrust of expertise can be a helpful instinct when it pushes you to expand your horizons. It's different when you reject expertise outright due to ideology or overconfidence.

Psychologists call this type of self-assurance the Dunning-Kruger effect. It's the belief that one's knowledge is much greater than it really is.

Knowing who to follow for expert knowledge today compounds the problem. Tom Nichols predicted the gravity of the challenge in his book The Death of Expertise. He says we're so profoundly influenced by the swirl of opinion and division that we've become an uninformed public. In the "age of intelligence," our collective IQ is somehow plummeting.

The issue stems from a "source crisis." How do you pull insight from an infinite haystack of information? What makes up a credible source to trust? How do we feel more informed on issues that matter to us?

Building a system can alleviate the challenges and level up your intelligence.

Marvin Minsky, the legendary co-founder of the AI Laboratory at MIT, offered a theory to consider. Minsky thought of the human mind as a "society" of tiny agents that are themselves mindless. True insight only comes from unique combinations of them. This theory of natural intelligence, "society of mind," informed the creation of artificial intelligence.

The data that powers the Internet operates in a similar way. Think about pre- and post-Internet authority.

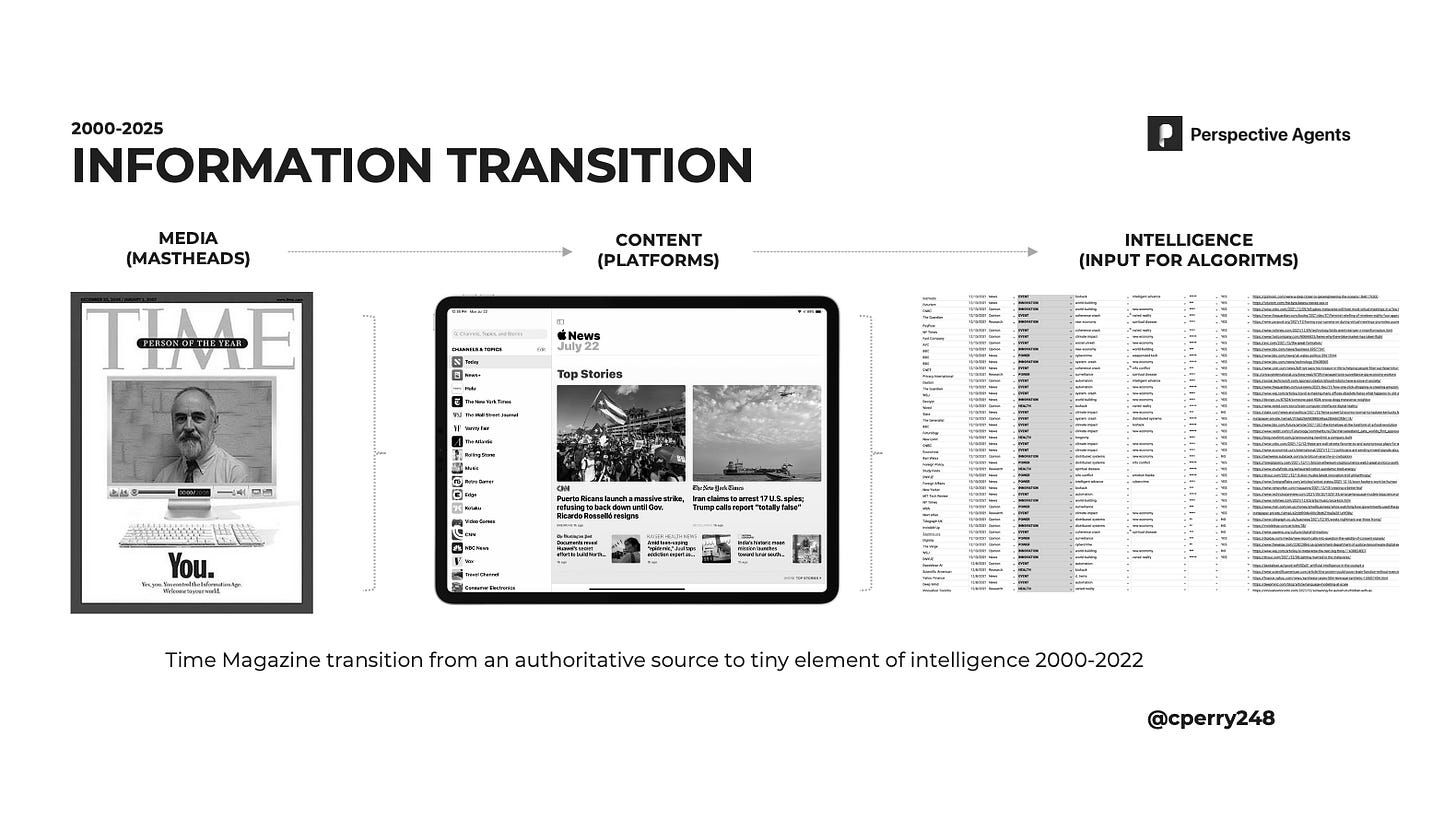

Time Magazine was once the pre-eminent source of world affairs. Now Time content sits alongside hundreds of other titles on newsreaders like Apple News. Beyond any single platform, a Time story is a small agent in a database with billions of other links.

Even if a Time story were to remain authoritative on its own, someone else's algorithm decides if we see it. And that system works like a high school caste system. We know newsfeeds reward popular or emotional content over substance. Audience reaction to the pithy, shiny, or outrageous begets distribution. The more reaction, the more we see.

It leads to a stock of real expertise and intelligence mashed up with an ample supply of bullshit.

A simplified illustration of our information supply is shown below. At the bottom of the stack is easy-to-produce opinion. More rigor, regulation, and analysis are necessary as you move up the stack. If your bias is towards the bottom of the chain, you're more likely to be misinformed or confused.

If algorithms favor attention over understanding, what’s the solution?

For now, it's on us to figure it out. If you care enough to be better informed, you need to put some work in. It's not as heavy a load as you might think.

The rest of this post documents a personal experiment.

It's a build of an alternative information system suited to my needs.

First off, for any such system to have value, it needs a diversity of inputs. I think of it in the context of personal networks. The more diverse the network, the more valuable it becomes. The same applies to information. A range of perspectives helps distill the essence of the issues.

I lead an R&D group, so my needs are broad. To see new opportunities and risks as clearly as possible, I need a lot of data. The system aggregates subscription intelligence, forecasts, academic research, media coverage, books, and discussion notes. A suite of connected apps helps me process, organize, and make sense of what's coming in.

This process is 80 percent automated. And now that it's set, the feeds build more profound knowledge over time. By fusing this data into a single system, I’m now 10X more informed about the issues relevant to my work and life.

I don’t need to rely on the news, Google, or any other single source to sense what’s up. Without my realizing it, the experiment grew over time into a self-sustaining, personal search engine.
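To make the "personal search engine" idea concrete, here is a minimal sketch in Python. It is not how the apps named in this post work internally. It only assumes that notes from different inputs can be collected as plain text and looked up by keyword. The `Note` and `PersonalIndex` names, the source labels, and the sample notes are all hypothetical.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Note:
    source: str  # hypothetical label, e.g. "book" or "newsreader"
    title: str
    text: str


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


class PersonalIndex:
    """A tiny inverted index: each word maps to the notes that contain it."""

    def __init__(self):
        self.notes = []
        self.postings = defaultdict(set)  # word -> set of note ids

    def add(self, note):
        """Store a note and index every word in its title and text."""
        note_id = len(self.notes)
        self.notes.append(note)
        for word in tokenize(note.title + " " + note.text):
            self.postings[word].add(note_id)

    def search(self, query):
        """Return notes containing every query word, in the order added."""
        words = tokenize(query)
        if not words:
            return []
        ids = set.intersection(*(self.postings.get(w, set()) for w in words))
        return [self.notes[i] for i in sorted(ids)]


# Hypothetical sample notes from three different inputs.
index = PersonalIndex()
index.add(Note("book", "Society of Mind", "Minds are made from many little agents."))
index.add(Note("newsreader", "Feed item", "Algorithms reward attention over understanding."))
index.add(Note("discussion", "Team notes", "Agents and algorithms in our R&D roadmap."))

for hit in index.search("agents"):
    print(hit.source, "-", hit.title)
```

Because every input lands in the same index, one query reaches across books, feeds, and discussion notes at once. That is the practical payoff of fusing the data into a single system.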

How might you approach building something similar?

Mindset. Having a curious, open mind about what to include is necessary to start. In particular, that means loosening the grip of conviction on who or what to trust.

Framework. What topics are most relevant to your work and well-being? Mine includes twenty topics likely to reshape society over the next twenty years. The framework shapes my perspective through evidence more than opinion. It also significantly decreases the time spent finding the sources of insight I need.

Tools. Knowledge management apps help find, process, and distill your information supply. Kindle, Feedly, Instapaper, Readwise, and Roam do most of my information processing. Courses like "Building a Second Brain" can help set up yours. The apps are cheap, if not free, and easy to set up.

This is a "sketch" of how my system works, from setting the framework to refining the engine.

Synthesis. Reading, highlighting, and commenting on content further refines your engine's "source code." Summation of essential elements aids the retention and retrieval of new concepts. The technology facilitates the retrieval of insights relevant to you at the moment. Most importantly, the connections between nodes help unlock new ideas and developments.

This is what the network of intelligence looks like in Roam.
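The "connections between nodes" can also be sketched in code. Roam marks a link to another page with double square brackets, like `[[agents]]`. The Python below is a rough sketch that assumes notes are available as plain text with simple, non-nested links of that form. The page titles, note text, and function names are hypothetical, and this is not Roam's own implementation.

```python
import re
from collections import defaultdict

# Matches simple [[page name]] links; nested links are not handled.
LINK = re.compile(r"\[\[([^\[\]]+)\]\]")


def build_backlinks(pages):
    """Map each linked page title to the sorted titles of pages that mention it."""
    backlinks = defaultdict(set)
    for title, text in pages.items():
        for target in LINK.findall(text):
            backlinks[target.strip()].add(title)
    return {target: sorted(sources) for target, sources in backlinks.items()}


def shared_topics(pages, a, b):
    """Return the link targets that both page a and page b mention."""
    links_a = {t.strip() for t in LINK.findall(pages[a])}
    links_b = {t.strip() for t in LINK.findall(pages[b])}
    return sorted(links_a & links_b)


# Hypothetical notes from two unrelated sources.
pages = {
    "Society of Mind notes": "Minsky describes [[agents]] that combine into [[intelligence]].",
    "Newsfeed notes": "Ranking [[algorithms]] act as [[agents]] competing for [[attention]].",
}

print(build_backlinks(pages)["agents"])
print(shared_topics(pages, "Society of Mind notes", "Newsfeed notes"))
```

A shared link target is the simplest kind of connection: two notes written at different times, from different sources, that turn out to touch the same idea.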

Community. Going it alone, even with automated help, is insufficient. Forums, writer collectives, fellowships, and cohort-based courses help build new knowledge with others. Coordinating varied perspectives into a consensus view compensates for a single interpretation. Experts in territories that matter to you can help.

If you don't have a system, you're at the mercy of sources with different incentives than yours. If you care about changes in the world, you must create something to distill meanings from them. If you’d rather live your life than constantly chase down information critical to you, you will need a platform like this.

My experiment is part of the growing personal knowledge management (PKM) movement. A growing cadre of knowledge keepers is building perspective-enhancing systems.

In the references below, you can get to know some of them to build your own.

SOURCES REFERENCED IN THE POST

Death of Expertise: Tom Nichols (YouTube summary)

Society of Mind: Marvin Minsky (book)

Building a Second Brain: Tiago Forte (cohort-based course)

Write of Passage: David Perell

PKM: Subreddit

Feedly: Newsreader

Instapaper: Content archive

Readwise: Book notes and article archive

Roam: Digital notes and search

PERSPECTIVE ALTERING STUFF

Most Americans say COVID is no longer a crisis. Men consistently overrate their own intelligence. Over 15% of the world's population deal with daily headaches. The newest psychedelic drug is…sound? 20 modern heresies. The beginning of the end for the dollar? The U.S. State Department launched a cyber bureau. 3D printed homes to become the Tesla of housing. A theory of why we’re all going nuts online. TikTok brain explained. Film critics are no longer in sync with audiences. A driverless car got pulled over by the cops. Chipotle tests tortilla chip-making robots to combat labor shortages. The EU wants to make all salaries transparent. MIT graduate students vote to unionize. Instagram to become an NFT marketplace. More evidence that psychedelics treat depression. New cancer-preventing vaccines to wipe out tumors before they form. This biotech plans to delay menopause by 15 years. Diabetes treated using ultrasound in preclinical study. DALL·E. Is. Amazing.

FINAL NOTE

An intellectual of the highest order, Marvin Minsky was a skeptic of authority. In this interview he said:

“In general, I think if you put emphasis on believing a set of rules that comes from somebody who's an authority figure, then there are terrible dangers. Most of cultures exist because they've taught people to reject new ideas.”

As a skeptic, Minsky helped push computing from a glorified adding machine to the most powerful amplifier of human knowledge in history.

Stay safe. I'll see you next week.

- CP

Perspective Agents are things that push the boundaries of thought. You can also find automated updates and new examples on Twitter. Thanks for the read.

I think you perfectly nailed it here with your point that algorithms favor attention over understanding. This is both true, and at the same time, such a lost opportunity. Because algorithms aren’t inherently “good” or “bad”. They are a fruit of our efforts to shape them in one direction or another. But the problem is, we are rarely in control of those algorithms.

This is one of the things we are going to try and prove with Groupd (https://groupd.co) - that shaping new algorithms for content understanding can be a powerful tool in the hands of tomorrow’s journalist, researcher, or busy knowledge worker. There is so much content out there coming out every second that trying to make sense of it on your own can be an unsurmountable hurdle. This is where we can let AI help us - by learning how we distinguish between content items, it can then do this task for us, transparently, and without the unnecessary focus on attention or other engagement hooks.

We are not live yet, but we are very much looking for our initial group of early adopters. I think that there is no better place to seek smart individuals than among your audience, Chris.

Great topic this week, Chris. I feel like all the news sources I have are one giant cul-de-sac recycling the same stories on a daily basis. I would love to engage in a different form of educational content. Getting tired of reading about who the Lions will select with the second pick in the NFL draft, how much I need to retire, and what is the best tequila.