The Missing Multiplier

What masters of the universe said about AI this week — read this to the end.

Here’s the word from the World Economic Forum this week: AI is now the most consequential issue on the global agenda. Above geopolitics. Above climate. Above the media hurricane around Trump.

The world’s most powerful leaders, investors, and executives have gathered in the Swiss Alps and decided this matters most. And two of the primary architects of AI said the quiet part out loud. You should pay close attention to them:

Yesterday, Anthropic CEO Dario Amodei projected “Nobel-level” AI intelligence and the total replacement of software engineers within 6 to 12 months. Google DeepMind CEO Demis Hassabis put 50% odds on human-level AI by 2030.

Both warned of a “lag” in labor statistics that masks a work crisis already in motion.

To stress: these are the main actors building AI systems — they see the power that the public has no view into.

AN OPERATING BUBBLE SEEN FROM THE TOP

Even so, here’s the problem: Davos conversations are atmospheric and directional, not operational (grounded in the daily realities we live in).

I’ve done the Davos trips and seen what happens when executives return. Lots of fire, plenty of directives. Then, beyond the fire and brimstone, few have clear ideas on what to actually do. In this case, they’ll need to figure it out, and fast.

The return on AI investment to date tells a brutal story.

PwC's 29th Global CEO Survey provided a reality check for executives. 56% of CEOs reported seeing no financial benefit from AI to date. Not underwhelming returns. No returns. That’s quite remarkable, given that AI had a similar placement on the WEF agenda last year.

The most powerful technology in human history, and we’re capturing a fraction of its value, if any.

A VIEW FROM THE GROUND

This isn’t a technology problem. It’s a human problem. Or, put by Mohamed Kande, PwC’s chairman, “most leaders have forgotten the basics” on AI strategy.

I have eight years of experience running AI labs focused on human potential and risk. Our team translates that read into the basics, meaning actions to take.

One conclusion:

No system exists to help people understand, think, and build with AI to create real value.

The models, platforms, and compute are there.

Here’s what’s not: the personal and organizational approach to make it work.

The training programs aren’t enough. The pilots don’t scale. The tools don’t fit how people get work done in the trenches. The champions burn out. The gains evaporate.

Everyone is investing in AI capability. Few are investing in human readiness.

At Andus Labs, we call this systemic gap the Human OS for AI.

It’s not software. It’s the organizational operating system — people, workflows, rituals, incentives, governance — that sits between AI capability and actual value.

Without it, the math doesn’t work. Capability times zero equals zero. With it, organizations unlock the multiplier. The same investment can yield a 2x, 4x, or 8x return.

People are the unlock. And few—if any—are building human capacity and org design to address it.

At Andus Labs, we’re mobilizing some of the best organizational architects, change leaders, and AI engineers to crack the code on unrealized potential.

ACTING ON THE SITUATION

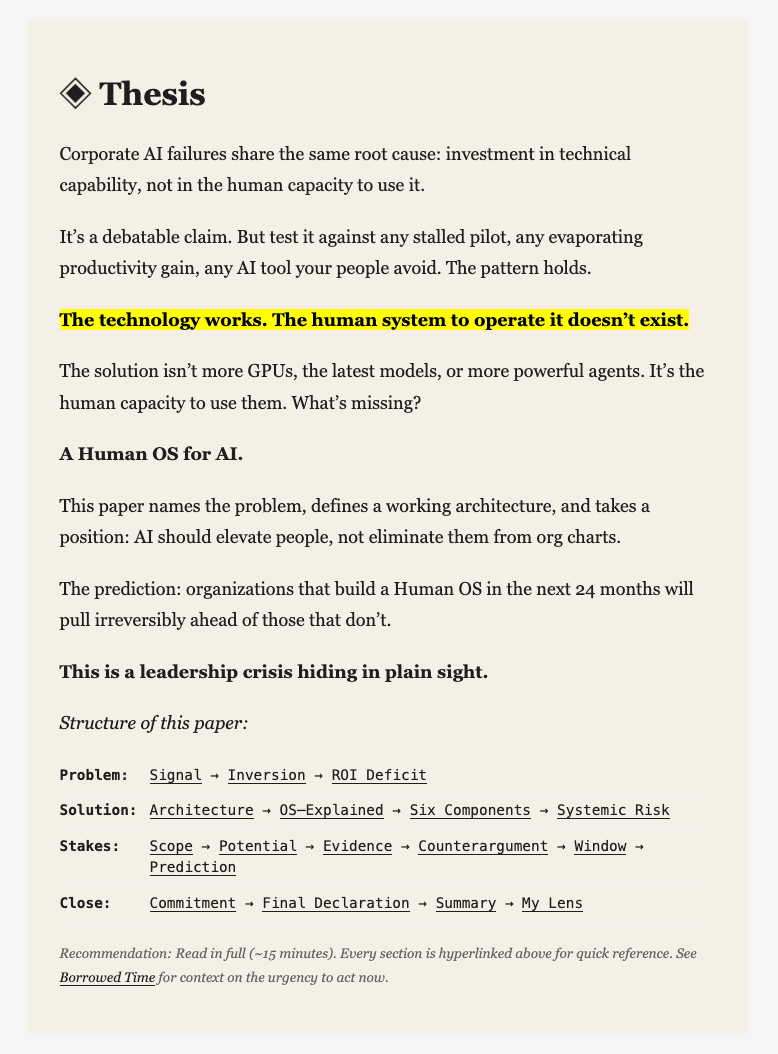

I just wrote a manifesto on the issue and this missing link. It names the problem, defines the architecture, and provides ways to act. It also predicts what happens next.

If you want to understand what’s actually at stake (not the Davos version, but the operational and personal version), read it.

If you want to engage with AI in a way that creates value instead of theater, read it.

If you want to protect your career and take it to unimaginable heights, read it.

Jamie Dimon warned this week that AI may move “too fast for society.” Anthropic’s Amodei maintained his prediction that up to half of entry-level white-collar jobs will disappear within the next one to five years. The warnings could fill another newsletter.

There’s no more time for reflection. Act now.

The part that should concern everyone building a career: if AI handles entry-level work, how do juniors develop the skills to become seniors? The apprenticeship model is broken. The ladder is being pulled up.

What most haven’t recognized yet: the ladder doesn’t stop at entry-level. Middle management is next. Eventually, the executive suite.

I’m convinced AI-native workers will rise — new ladders will form. The AI-ambivalent are screwed.

The Human OS isn’t just an organizational problem. It’s a career problem. Yours.

Read the paper to broaden and deepen your understanding of what’s happening and what’s missing. The thesis is summarized below.

Go to The Human OS for AI: https://humanreadiness.org/

The conversation at Davos is important. But it won’t tell you what to do tomorrow morning.

This just might.

Every post has a comment button. Most don’t deserve it. This one does. Read the manifesto. Think about what’s at stake. Then, in the comments, tell other readers what you see.