Last night’s Super Bowl drew an estimated 110+ million viewers, eight large to enter the building, and $7 million for a thirty-second spot. It featured two iconic franchises going into overtime. “Mr. Irrelevant” playing like a near-champ. Patrick Mahomes entering Tom Brady territory. And the clip seen around the world: Taylor Swift swigging drinks upstairs. It’s the last time a scene like this will be experienced in only two dimensions.

Technologically speaking, we’ve come a long, long way since Super Bowl I. NBC and CBS both broadcast the first game. Five cameras in total were employed to capture it. Video replay was so new that networks labeled it “Video Tape” so viewers would know what they were looking at. Steve Sabol from NFL Films said the production crew could’ve fit in the back of a station wagon. For years, there was no archive of the broadcast. Networks recorded soap operas over the game tapes. There were no VCRs to save it.

Fifty-seven years later, the Super Bowl is the signature multimedia event in a year-long NFL spectacle. Across 272 regular-season games and the playoffs, networks ensure games are experienced from all angles, with replays and backstories analyzed seemingly 24 hours a day. Each game is a significant production, with broadcasters deploying 12 to 20 cameras and 150 to 200 employees for regular-season contests.

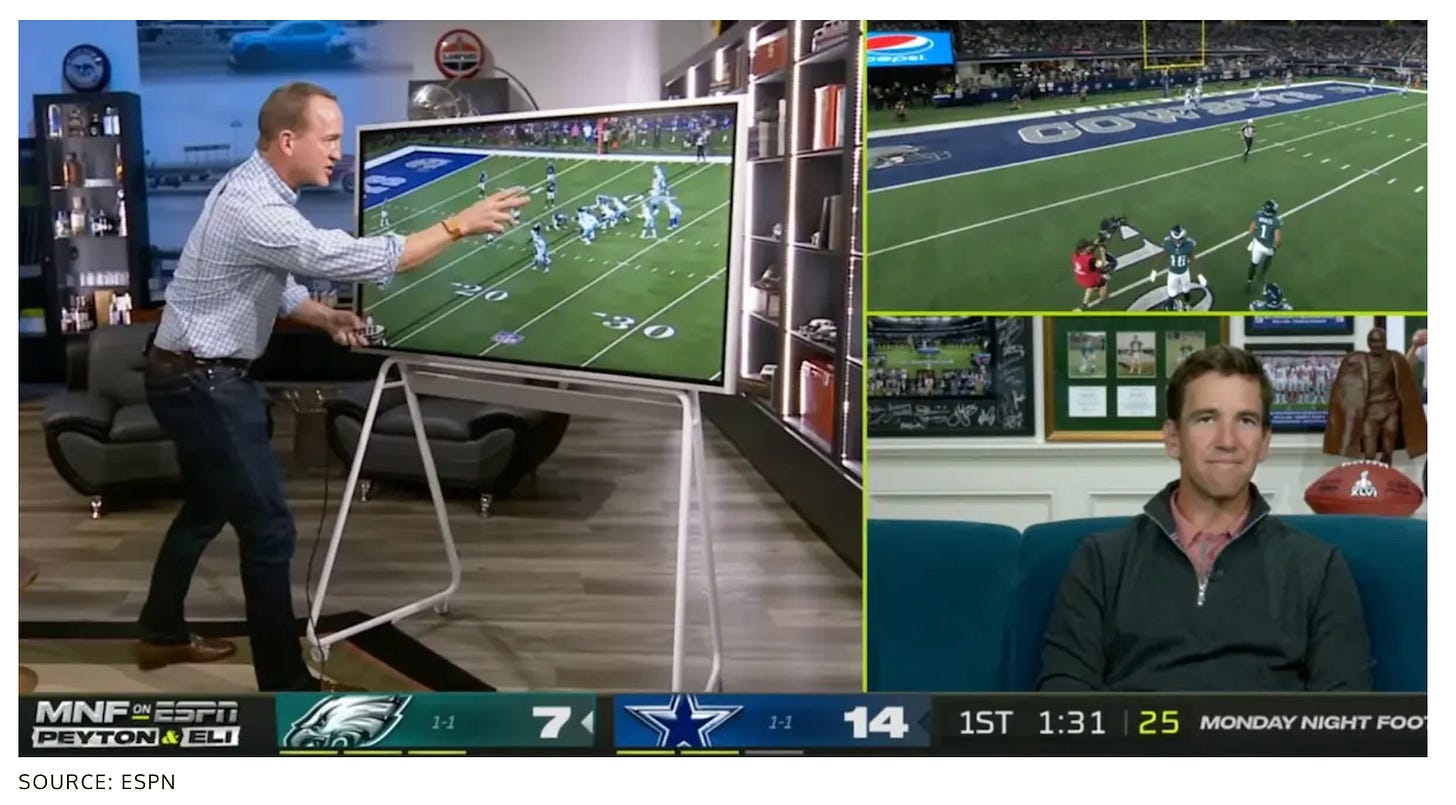

New formats are invented in and around the games, like ESPN’s ManningCast, where we watch others watch the game. Or Nickelodeon extending the presentation to kids with cartoon characters and overlays. But what revolutionary leaps can now be made in moving from 2D mediums to 3D?

A New Way to Experience Sports

Immersive worlds like Apple’s spatial computing medium will make this year’s broadcast seem as antiquated as the first Super Bowl broadcast in January 1967.

Mind you, it was possible to watch last night’s game via Vision Pro. It was an ultra-sharp experience streamed to a giant virtual screen with hi-def audio. But the game wasn’t produced in Apple Immersive Video. When it is, it will take us to lots of new places.

Imagine a scenario where micro drones and tiny cameras deliver a comprehensive spatial view from overhead, the ground, and the players’ perspective. It would extend the “extreme realism” found in Madden NFL 24 into NFL broadcasts, revolutionizing how fans experience the game.

Picture moving beyond the pylon cameras that frame the end zones and introducing cameras in the first-down markers or on robotic stands. It would allow viewers to see the game from the line of scrimmage at ground level, mimicking the exclusive access currently reserved for those holding field passes.

Envision a player’s perspective, moving from sideline observation to immersive on-field presence. Imagine seeing the game through the eyes of a quarterback, linebacker, or referee. With seven on-field officials and twenty-two players in action, equipping them with cameras would provide a myriad of hyper-real perspectives on every play. Add to that a micro camera embedded in the football, offering the viewpoint of being carried and passed down the field.

Lastly, think about expanding Nickelodeon’s kid-centric broadcasts, allowing individuals to incorporate AR characters and overlays into personalized shows.

Cameras could number in the hundreds. AI production assistants may enable editing from a vast network of game views. AR overlays would provide more engaging data, insights, and images. Bespoke experiences will eventually be tailored to what fans sign up for.

It all seems technically unfeasible right now, but we couldn’t have predicted what’s possible on Vision Pro today, or what might be possible soon (though Nickelodeon’s broadcast last night gives us a partial view).

New Experiences Around the Game

Given its ownership of a new medium, Apple could become the exclusive spatial partner for the league. It would earn that role by co-creating a completely new experience, combining all the elements above with new upgrades and content exclusives delivered to headsets.

NFL Films, renowned for its iconic documentary storytelling, could take pre-game storytelling and hype into new dimensions. Programs like Hard Knocks may expand spatially, transporting viewers into players’ homes, coaches’ rooms, and the intensity of practice fields as if we’re personally there.

For promotions, the Super Bowl Halftime Show could be transformed into an event specifically designed for Vision Pro users. We could join artists during practice sessions, sit in on interviews, stand on stage at the game, and partake in post-game celebrations.

The retail experience at the NFL Shop may also evolve into a fully 3D world, enabling fans to easily browse and purchase real and digital merchandise featuring their favorite teams and players. These purchases could be made with a simple touch via Apple Pay, redefining how fans engage with sports memorabilia.

In the future, sportsbooks will take on new forms, moving beyond the traditional confines of casinos to a virtual arena integrated within or alongside broadcasts. DraftKings’ betting data, odds, and props might be displayed within the viewing experience, further turning the game into a participatory event.

Use cases for new technologies like spatial computing are first developed with previous mediums in mind—in this case, experiences created for TV and mobile phones.

New ones require leaps of imagination we can’t fully comprehend with a week-old medium. There’s no telling where spatial experiences can take us. But no doubt, it will change our perspective on the Super Bowl and other events we love.

Perspective Agents has been recognized as an Amazon Best Seller and #1 new release in computers and technology, AI & semantics, and social aspects of technology.

For those who purchased the book, thank you. If you’re willing, I’d be grateful if you posted a review on Amazon or wherever you picked it up. Reviews add to the book’s credibility and the chances others will find value in it.

Thanks for reading.